Modern digital systems constantly generate enormous volumes of data. Managing that data properly helps teams catch problems early and keep digital services up and running. An observability pipeline is a valuable way to collect and use logs, metrics, and traces.

A pipeline filters out unimportant information and sends critical data straight to where it is needed. With a well-developed pipeline, teams can act quickly on accurate insights to protect their systems.

What Role Does an Observability Pipeline Play?

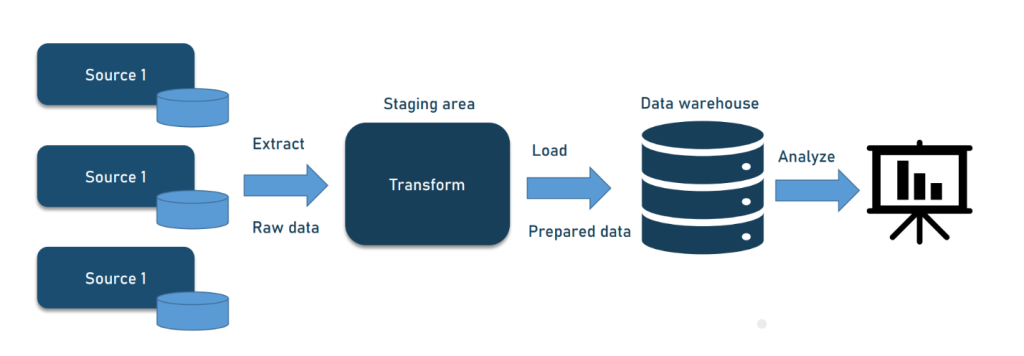

At its core, an observability pipeline chains together a series of stages that carry data from its source to the point where it is analyzed. Here’s how it works:

- Data Collection: Gathers telemetry from servers, applications, and services.

- Processing: Cleans and standardizes the data, then enriches it with relevant tags or metadata.

- Routing: Sends the processed data to dashboards, alerting systems, or designated storage locations.

- Optimization: Reduces data volume through compression or sampling, which keeps costs down and speeds up analysis.
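The four stages above can be sketched as a chain of small functions. This is a minimal, hypothetical example rather than how any particular tool works: the event fields (`service`, `level`, `message`), the `environment` tag, the route names `alerts` and `storage`, and the 10% sample rate for debug events are all illustrative assumptions.

```python
import gzip
import json
import random
from collections import defaultdict

# Hypothetical raw events, standing in for telemetry collected from services.
RAW_EVENTS = [
    {"service": "checkout", "level": "ERROR", "message": "payment timeout "},
    {"service": "checkout", "level": "debug", "message": "cart loaded"},
    {"service": "search", "level": "info", "message": " query served"},
]


def collect(source):
    """Data collection: yield events one at a time from a source."""
    yield from source


def process(event, environment="production"):
    """Processing: clean, standardize, and enrich an event with a tag."""
    return {
        "service": event["service"],
        "level": event["level"].upper(),      # standardize level names
        "message": event["message"].strip(),  # tidy stray whitespace
        "environment": environment,           # enrichment tag (assumed)
    }


def keep(event, debug_sample_rate=0.1, rng=random.random):
    """Optimization: keep every non-debug event, sample DEBUG events."""
    if event["level"] == "DEBUG":
        return rng() < debug_sample_rate
    return True


def route(event):
    """Routing: choose a destination by severity (route names assumed)."""
    return "alerts" if event["level"] == "ERROR" else "storage"


def run_pipeline(source, rng=random.random):
    destinations = defaultdict(list)
    for raw in collect(source):
        event = process(raw)
        if keep(event, rng=rng):
            destinations[route(event)].append(event)
    return dict(destinations)


def compress(events):
    """Optimization: gzip a batch of events before it goes to storage."""
    return gzip.compress(json.dumps(events).encode("utf-8"))


if __name__ == "__main__":
    # rng=lambda: 1.0 drops every DEBUG event so the run is deterministic.
    routed = run_pipeline(RAW_EVENTS, rng=lambda: 1.0)
    for name, events in routed.items():
        print(name, [e["message"] for e in events])
    batch = compress(routed.get("storage", []))
    print("compressed storage batch:", len(batch), "bytes")
```

In this sketch, sampling runs before routing so that dropped events never reach any destination, while compression is applied to the storage batch, where volume matters most. The `rng` parameter exists only so the example can be run deterministically.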

Together, these stages ensure that the most relevant data reaches the people responsible for acting on it.

Why Is It Important?

Without a proper pipeline, raw data piles up and becomes much harder to interpret. The result is slower problem resolution, missed alerts, and higher service costs. A structured observability pipeline offers the following benefits:

- Faster detection of issues

- Clear patterns for troubleshooting

- Reduced strain on monitoring tools

- Lower storage and processing costs

By sorting through messy data, the pipeline retains the valuable information teams need to keep digital systems performing well.

Uses in Different Sectors

Many industries rely on observability pipelines:

- Retail: Monitoring website availability and making sure the checkout process runs smoothly.

- Banking: Detecting unusual transactions and anything that slows the system down.

- Healthcare: Preventing interruptions to critical devices and platforms.

In each of these sectors, observing systems in real time is essential.

What Are the Common Challenges?

Although the benefits are clear, building a pipeline comes with challenges. The main ones include:

- Integrating data from different systems

- Scaling capacity as data volumes grow

- Complying with rules and guidelines for protecting confidential information
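The first and last challenges often meet in the processing stage: records from different systems have to be mapped onto one schema, and confidential values should be masked before data leaves the pipeline. The sketch below is a hypothetical illustration; the source names, field mappings, and regular expressions are assumptions, and simple pattern matching like this is only a starting point rather than a full compliance rule set.

```python
import re

# Hypothetical field mappings: each source names the same fields differently.
FIELD_MAPS = {
    "app_logs": {"ts": "timestamp", "msg": "message", "svc": "service"},
    "legacy_syslog": {"time": "timestamp", "text": "message", "host": "service"},
}

# Illustrative patterns only (assumed): e-mail addresses and 13-16 digit
# card-like numbers, optionally separated by spaces or hyphens.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")


def normalize(record, source):
    """Map a source-specific record onto one shared schema."""
    mapping = FIELD_MAPS[source]
    event = {common: record[raw] for raw, common in mapping.items() if raw in record}
    event["source"] = source
    return event


def redact(text):
    """Mask e-mail addresses and card-like digit runs in a message."""
    text = EMAIL_RE.sub("[REDACTED_EMAIL]", text)
    return CARD_RE.sub("[REDACTED_CARD]", text)


def prepare(record, source):
    """Normalize a record, then redact its message field if present."""
    event = normalize(record, source)
    if "message" in event:
        event["message"] = redact(event["message"])
    return event


if __name__ == "__main__":
    print(prepare({"ts": "2024-05-01T12:00:00Z", "svc": "auth",
                   "msg": "login failed for alice@example.com"}, "app_logs"))
    print(prepare({"time": "May 1 12:00:01", "host": "pay-01",
                   "text": "card 4111 1111 1111 1111 declined"}, "legacy_syslog"))
```

Redacting inside the pipeline, before routing, means dashboards and storage downstream never receive the raw values.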

Even so, modern engineering practices make it possible to build pipelines that suit both small and large systems.

Moving Forward with Observability

As digital platforms expand, managing data well will only become more critical. Future observability tools may apply AI and machine learning to address problems before they affect users. These tools are also likely to support edge computing, where real-time data processing is essential.

Preparing now with a flexible approach to observability will help keep your operations running smoothly and your users satisfied in the future.